Paz Peña and Joana Varon

Case-study: Plataforma Tecnológica de Intervención Social*/Projeto Horus – Argentina and Brazil

Let’s say you have access to a database with information on 12,000 girls and women between 10 and 19 years old who live in a poor province of South America. The data include age, neighborhood, ethnicity, country of origin, educational level of the household head, physical and mental disabilities, number of people sharing a house, and whether or not the home has hot water. What conclusions would you draw from such a database? Or maybe the question should be: is it even desirable to draw any conclusion at all? Sometimes, and sadly more often than ever, the mere possibility of extracting large amounts of data is a good enough excuse to “make them talk” and, worst of all, to make decisions based on that. The database described above is real. It is used by public authorities, initially in the municipality of Salta, Argentina, where it has been piloted since 2015 under the name “Plataforma Tecnológica de Intervención Social” (“Technological Platform of Social Intervention”). In theory, the goal of the system was to prevent school dropouts and teenage pregnancy.

Who develops it?

The project started as a partnership between the Ministry of Early Childhood of the Province of Salta, Argentina, and Microsoft. Representatives of both present the system as a very accurate, almost magical, predictive tool: “Intelligent algorithms allow identifying characteristics in people that could end up in any of these problems and warn the government to work in their prevention”, said a representative of Microsoft Azure, the program's machine learning system. “With technology, based on name, surname, and address, you can predict five or six years ahead which girl, future teenager, is 86% predestined to have a teenage pregnancy”, declared Juan Manuel Urtubey, a conservative politician and governor of Salta at the time of the pilot deployment.

System Audits and other criticisms

But predicting, and even predestining, someone for pregnancy is not that simple, neither for mathematicians nor for fortune-tellers. Not surprisingly, criticism of the “Plataforma Tecnológica de Intervención Social” started to arise. Some called the system a lie, an intelligence that does not think, a hallucination, and a risk to the sensitive data of poor women and children. A very thorough technical analysis of its failures was published by the Laboratorio de Inteligencia Artificial Aplicada (LIAA) of the University of Buenos Aires. According to LIAA, which analyzed the methodology posted on GitHub by a Microsoft engineer, the results were falsely inflated due to statistical errors in the methodology; the database is biased; and it does not take into account the sensitivities of reporting an unwanted pregnancy. The data collected is therefore inadequate for making any future prediction, and it is likely to include more pregnancies from one particular sector of society than from others, stigmatizing the poor. According to Luciana Ferrer, a researcher from LIAA:

“If you are assuming that those who answered the surveys told the truth about being pregnant before or at the moment of the survey, our data is likely to be inaccurate. On such a delicate topic as teenage pregnancy, it would be prudent to assume that many teenage girls do not feel safe to tell the truth, above all if they have had or want to have an abortion (in Argentina, just like in several countries in Latin America, access to safe abortion was legal only in cases of rape or when the mother’s health was at risk. The situation in the country changed only in December 2020, when a historic bill was approved legalizing the freedom to choose to interrupt a pregnancy up to the 14th week). This implies that, using these data, we will be learning from biased information, influenced by the fact that in some privileged sectors of the population there was access to safe abortion and in others the issue is taboo and, therefore, something that the adolescent would hide in an interview.”
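The reasoning in Ferrer's quote can be made concrete with a small arithmetic sketch. It is only an illustration under stated assumptions: the two groups, the shared true rate, and the shares of pregnancies that end up recorded are all hypothetical numbers, not figures from the Salta database or from LIAA's analysis. It shows that two groups with the same real rate can look very different in the data when pregnancies are recorded unevenly.

```python
# Hypothetical illustration of reporting bias.
# None of these numbers come from the Salta data or the LIAA report.

def recorded_rate(true_rate: float, share_recorded: float) -> float:
    """Rate a survey would record if only a share of real cases is captured."""
    return true_rate * share_recorded

TRUE_RATE = 0.10  # assumed to be identical in both groups

# Assumed share of real pregnancies that appear in the survey for each group
# (lower where pregnancies are hidden or ended before being recorded).
groups = {
    "group_a": 0.4,
    "group_b": 0.9,
}

recorded = {name: recorded_rate(TRUE_RATE, share) for name, share in groups.items()}

for name, rate in recorded.items():
    print(f"{name}: true rate {TRUE_RATE:.0%}, recorded rate {rate:.1%}")

# A model trained on the recorded labels would see group_b as far riskier,
# even though the underlying rate is the same by construction.
ratio = recorded["group_b"] / recorded["group_a"]
print(f"group_b appears {ratio:.2f} times as 'at risk' as group_a")
```

Any classifier trained on labels like these learns the pattern of who gets recorded, not the pattern of who becomes pregnant.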

It is interesting to note that the Ministry of Early Childhood worked for years with the anti-abortion NGO Fundación CONIN to showcase this system. Urtubey’s declaration, mentioned above, was made in the middle of the campaign to change the law towards legalizing abortion in Argentina, a social demand that in 2018 took over the local and international news for months. The idea that algorithms can predict teenage pregnancy before it happens was the perfect excuse for anti-women and anti-sexual and reproductive rights activists to declare safe abortion laws unnecessary. According to their narratives, if they have enough information from poor families, conservative public policies can be deployed to predict and avoid abortions by poor women. Moreover, there was also a common, but mistaken, belief that “if it is recommended by an algorithm, it is mathematics, so it must be true and irrefutable.”

Furthermore, it is also worth noting that the system has chosen to work on a database composed only of female data. This focus on one sex reinforces patriarchal gender roles and, ultimately, blames female teenagers for unwanted pregnancy, as if a child could be conceived without sperm. Even worse, it can also be seen as an initiative that departs from a logic of blaming the victim, particularly if we consider that the database includes girls as young as 10 and minors only slightly older, whose pregnancy could only be the result of sexual violence. How can a machine say you are likely to be the victim of a sexual assault? And how brutal is it to conceive of such a calculation?

But even in the face of several criticisms, the initiative continued to be deployed. Worse, bad ideas dressed as innovation spread fast: the system is now being deployed in other Argentinian provinces, such as La Rioja, Chaco, and Tierra del Fuego. It has also been exported to Colombia, where it was implemented at least in the municipality of La Guajira, and, as we will see, to Brazil.

From Argentina to Brazil

Another iteration of that same project has also reached the Brazilian Federal Government, through a partnership between the Brazilian Ministry of Citizenship and Microsoft. Allegedly, by September 2019, Brazil was the 5th country in Latin America to adopt Projeto Horus, presented in the media as a “tech solution to monitor social programs focused on child development.” The first city to test the program was Campina Grande, in the State of Paraíba, in the northeast of Brazil, one of the poorest regions of the country. Among the authorities and institutions at the kickoff meeting were a representative from Microsoft, the Ministry of Early Childhood from the municipality of Salta, and members of the Brazilian Ministry of Citizenship. Romero Rodrigues, the mayor of Campina Grande, is also aligned with evangelical churches.

Analysis

Through access to information requests (annex I), we consulted the National Secretariat of Early Childhood Care (SNAPI) and the Undersecretariat of Information Technology (STI) of that Ministry to request more information about the partnership. These institutions stated:

“The Ministry of Citizenship has signed with Microsoft Brasil LTDA the technical cooperation agreement n° 47/2019, for a proof of concept for an artificial intelligence tool to support improvements in the actions of the program Happy Child/Early Childhood (Criança Feliz/Primeira Infância).”

The Brazilian Minister of Citizenship who signed the agreement is Osmar Gasparini Terra who, believe it or not, is a sympathizer of flat Earthism, that is, the belief that the Earth is not spherical. As with climate change denial and creationism, some allege that flat Earth theory is rooted in Christian fundamentalism. Terra also held a denialist discourse about the COVID-19 pandemic but, in this case, he trusted math and A.I. as the sole tool for producing diagnostics to inform public policies, as expressed in the agreement:

“The Ministry wishes to carry out an analysis for the Criança Feliz program, using technological data processing tools based on artificial intelligence as a diagnostic mechanism aimed at detecting situations of social vulnerability as a guide for the formulation of preventive and transformative public policies.”

Just like in the Chilean and Argentinian cases, a neoliberal vision was behind the rationale for trusting an algorithm to “automate, predict, identify, surveil, detect, target and punish the poor.” This was also explicit in the agreement, which states that the purpose of the system was to:

“optimize resources and the construction of initiatives that can improve the offer of services aimed at early childhood, in a more customized way and with greater effectiveness.”

The agreement thus restates the logic of automating neoliberal policies. More specifically, it establishes a mechanism for a Digital Welfare State, heavily dependent on data collection and the conclusions drawn from that data:

“The cooperation aims to build, together, a solution that collects data through electronic forms and the use of analytical and artificial intelligence tools on this data to support actions of the Happy Child program.”

When asked about the databases used, they listed:

- Sistema Único de Assistência Social – SUAS (Unified Social Assistance System)

- Cadastro Único

- CADSUAS, from the Ministry of Social Development

All of them are databases of social programs in Brazil, used in this case under the logic of surveillance of vulnerable communities, made up of people who are not only poor but also children. All of that in a partnership with a foreign company. Did Microsoft have access to all these databases? What did the company gain from entering the agreement, given that no transfer of financial resources was agreed? The agreement states:

“This Agreement will not involve the transfer of financial resources between the parties. Each party will assume its own costs as a result of the resources allocated in the execution of the scope and its attributions, with no prior obligation to assume obligations based on its results.”

Did Microsoft have access to the databases on Brazilians? Is Microsoft using databases on the poor in Latin America to train its machine learning systems? We tried to schedule an interview with a representative from Microsoft in Brazil who was talking about the project in the media, but after we sent the questions, the previously scheduled interview was canceled. These were the questions sent to Microsoft:

1) Considering the technical cooperation agreement signed in September 2019 between the Ministry of Citizenship and Microsoft to carry out a proof of concept to implement artificial intelligence tools that support improvements in the actions of the Happy Child program:

a) What is the result of the proof of concept?

b) What datasets were used by the algorithm to detect situations of social vulnerability?

c) What kind of actions would be suggested by the platform in case of vulnerability and risk detection?

d) What are the next steps in this proof of concept?

2) Does the company have other contacts with the Ministry of Citizenship or other Ministries of the Brazilian government for proofs of concept or implementation of artificial intelligence projects for social issues?

3) Does the company have an internal policy to promote research and development of activities of AI and the public sector focused on the country?

As we could not obtain a position from Microsoft, we also asked the Ministry for more information about the promised “greater effectiveness”, which was nevertheless never proven. When the agreement was signed, back in September 2019, a lot of criticism analyzing the case of Salta had already been published, yet the agreement only recognized Microsoft’s “experience and intelligence gained”, as quoted below:

“Whereas Microsoft has already developed a similar project (…) with the PROVINCE OF SALTA, in the Argentine Republic, and all the experience and intelligence gained from it can be used, with this agreement, a cooperation is established for development, adaptation and use of a platform in Brazil.”

So we requested information from the Ministry about error margins and data on the result of the proof of concept. They replied:

“there is no information regarding the margin of error used in the technologies involved.”

They also stated that the agreement lasted 6 months, from September 23rd, 2019 onwards. The agreement was therefore no longer in force at the time of the answer (December 18th, 2020), and so they added:

“we emphasize that such technology is not being implemented by the Happy Child Program, therefore, we are unable to meet the request for statistical data on its use and effectiveness.”

That was a strange statement, considering that in the work plan attached to the agreement, both Microsoft and the Ministry were assigned to the activities pertaining to the analysis and evaluation of results. Given these circumstances and answers, we could say that the attempt to transpose the system to Brazil was another expression of colonial extractivism since, allegedly, not even the Brazilian government kept records of the results of the proof of concept, which remains a closed box. The only thing we know is that, according to the agreement, Microsoft was exempt from any responsibility for possible harm caused by the project:

“Microsoft does not guarantee or assume responsibility for losses and damages of any kind that may arise, by, for example: (i) the adequacy of the activities provided in this Agreement and the purposes of the Ministry or for the delivery of any effective solution; and (ii) the quality, legality, reliability and usefulness of services, information, data, files, products and any type of material used by the other parties or by third parties.”

Conclusions

The A.I. systems examined in the case studies show that their design and use by states respond to a continuum of neoliberal policies that have abounded in Latin America, to varying degrees, during the last 40 years (López, 2020). On the one hand, they are instruments that automate ideological decisions, such as the targeting of resources, and lend them the status of technological solutions (Alston, 2019). In this case, it is mainly about using big data to produce a more detailed category of poor children and adolescents (López, 2020) and, a step further, to automate the determination of their social risk. The vulnerable childhood approach is a classic neoliberal take, enforced in the region by entities like the World Bank, and it comes from the idea of poverty as an individual problem (not a systemic one) and of caseworkers as protectors of people “at-risk” (Muñoz Arce, 2018).

On the other hand, these A.I. systems are a new stage in the technocratization of public policies. The degree of participation in their design and the transparency of the process are doubtful. In the case of Brazil, the proof of concept agreement had a 6-month work plan, which makes it evident that there was no intent to conceive a broader, inclusive process with the targeted community, only to deploy a tech tool. So not only do Brazilian citizens have no access to any data assessing the pilot, but the people affected by these systems, that is, poor children, adolescents, and their families, are not even consulted, since they are not recognized as interested parties. Likewise, consenting to the use of personal data as a condition for receiving or being denied social benefits opens a whole discussion about the ethics of these systems that has not been resolved.

Likewise, there is evidence that the use of A.I. to predict possible vulnerabilities not only does not work well in the social care of children and adolescents (Clayton et al., 2020), but also ends up being quite costly for states, at least in the first stages, which seems contrary to neoliberal doctrine (Bright et al., 2019). How many hours of public officials’ work were devoted to a proof of concept whose results are neither documented nor publicly available?

Although technologies are understood as sociotechnical systems, the conception of AI as an objective process prevails, both in data collection and in processing. This is concerning in several ways. First, because states and developers pay little attention to bias in social class and race, repeating the racial idea of colorblindness (Noble, 2018; Benjamin, 2019). Second, because errors in the technology’s risk predictions for children and adolescents are expected to be shielded by human intervention, giving the machine some impunity to continue working. However, there are no field studies in the case we have examined that consider how caseworkers who interact with the machine deal, or fail to deal, with “automation bias”, that is, the tendency to value automated information more highly than our own experience (Bridle, 2018).

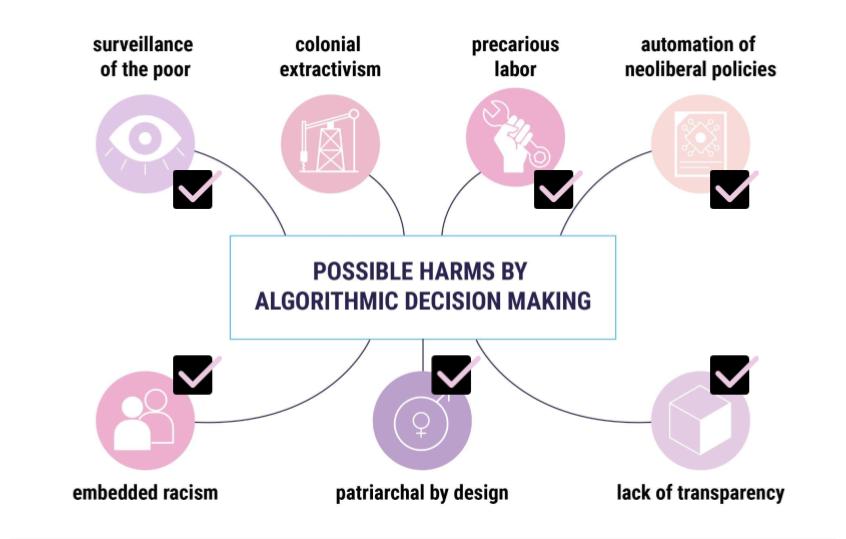

In summary, we can say that the “Plataforma Tecnológica de Intervención Social” and Projeto Horus are a very eloquent example of how Artificial Intelligence’s purported neutrality has been increasingly deployed in some countries in Latin America to assist potentially discriminatory public policies that could undermine the human rights of underprivileged people, as well as monitor and censor women and their sexual and reproductive rights. Applying our Oppressive A.I. framework, we could say it ticks all the boxes.

Links to transparency requests and answers from the Brazilian government

- Pedido&resposta_LAI_MinCidadania_71003_129432_2020_71

- Acordo_Cooperacao_Tecnica_47__Microsoft

- Pedido&resposta_LAI_MinCidadania_71003_129428_2020_11

- Pedido&resposta_LAI_MinEcon_00106_030439_2020_31

- Pedido&resposta_LAI_MMFDH_00105_003197_2020_12

* The Argentinian component of this case study was developed and originally published in the article “Decolonize AI: a feminist critique towards data and social justice”, written by the same authors of this report for the publication Giswatch: Artificial Intelligence Human Rights 2019, available at: https://www.giswatch.org/node/6203

Bibliography

Alston, Philip. 2019. Report of the Special rapporteur on extreme poverty and human rights. Promotion and protection of human rights: Human rights questions, including alternative approaches for improving the effective enjoyment of human rights and fundamental freedoms. A/74/48037. Seventy-fourth session. Item 72(b) of the provisional agenda.

Benjamin, R. (2019). Race after technology: Abolitionist tools for the new Jim code. Polity.

Birhane, Abeba (2019, July 18th). The Algorithmic Colonization of Africa. Real Life Magazine. www.reallifemag.com/the-algorithmic-colonization-of-africa/

Bridle, J. (2018). New Dark Age: Technology, Knowledge and the End of the Future. Verso Books.

Bright, J., Bharath, G., Seidelin, C., & Vogl, T. M. (2019). Data Science for Local Government (April 11, 2019). Available at SSRN: https://ssrn.com/abstract=3370217 or http://dx.doi.org/10.2139/ssrn.3370217

Clayton, V., Sanders, M., Schoenwald, E., Surkis, L. & Gibbons, D. (2020). Machine Learning in Children’s Services: Summary Report. What Works For Children’s Social Care. UK.

Crawford, Kate. (2016, June 25th). Artificial Intelligence’s White Guy Problem. The New York Times. www.nytimes.com/2016/06/26/opinion/sunday/artificial-intelligences-white-guy-problem.htm

Daly, Angela et al. (2019). Artificial Intelligence Governance and Ethics: Global Perspectives. THE CHINESE UNIVERSITY OF HONG KONG FACULTY OF LAW. Research Paper No. 2019-15.

Davancens, Facundo. Predicción de Embarazo Adolescente con Machine Learning: https://github.com/facundod/case-studies/blob/master/Prediccion%20de%20Embarazo%20Adolescente%20con%20Machine%20Learning.md

Eubanks, V. (2018). Automating inequality: how high-tech tools profile, police, and punish the poor. First edition. New York, NY: St. Martin’s Press.

Kidd, Michael (2016). Technology and nature: a defence and critique of Marcuse. Volum IV, Nr. 4 (14), Serie nouă. 49-55. www.revistapolis.ro/technology-and-nature-a-defence-and-critique-of-marcuse/

Laboratorio de Inteligencia Artificial Aplicada. 2018. Sobre la predicción automática de embarazos adolescentes. www.dropbox.com/s/r7w4hln3p5xum3v/[LIAA]%20Sobre%20la%20predicci%C3%B3n%20autom%C3%A1tica%20de%20embarazos%20adolescentes.pdf?dl=0 and https://liaa.dc.uba.ar/es/sobre-la-prediccion-automatica-de-embarazos-adolescentes/

López, J. (2020). Experimentando con la pobreza: el SISBÉN y los proyectos de analítica de datos en Colombia. Fundación Karisma. Colombia.

Masiero, Silvia & Das, Soumyo (2019). Datafying anti-poverty programmes: implications for data justice. Information, Communication & Society, 22:7, 916-933.

Muñoz Arce, G. (2018). The neoliberal turn in Chilean social work: frontline struggles against individualism and fragmentation. European Journal of Social Work. DOI: 10.1080/13691457.2018.1529657

Noble, S. U. (2018). Algorithms of oppression: How search engines reinforce racism. New York University Press.

O’Neil, Cathy. (2016). Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. New York: Crown.