Joana Varon and Paz Peña

Notmy.ai is an ongoing effort, a work-in-progress debate that seeks to contribute to the development of a feminist framework for questioning the algorithmic decision-making systems being deployed by the public sector. Our ultimate goal is to develop arguments and build bridges for advocacy with different human rights groups, from women’s rights defenders to LGBTIQ+ groups, especially in Latin America. Together we want to address the trend in which governments adopt A.I. systems to deploy social policies, not only without considering human rights implications but also in disregard of the oppressive power relations that might be amplified through the false sense of neutrality brought by automation: the automation of a status quo pervaded by inequalities and discrimination.

The hype around A.I. systems fosters a recurrent narrative that automated decision-making processes can work like a magic wand to “solve” social, economic, environmental, and political problems. This leads to questionable expenditures of public resources and allows already monopolistic private companies to exploit sensitive citizen data for profit and without constraints. These systems tend to be developed by privileged demographics, against the free will of those who are likely to be targeted, or “helped”, and without their opinion or participation from the outset. The result is automated oppression and discrimination by Digital Welfare States that use math as an excuse to evade political responsibility. Ultimately, this trend has the power to dismiss any attempt at a collective, democratic and transparent response to core societal challenges.

To face this pervasive trend, we start from the perspective that decolonial feminist approaches to life and technologies are great instruments to envision alternative futures and to overturn the prevailing logic in which A.I. systems are being deployed. As Silvia Rivera Cusicanqui poses: “How can the exclusive, ethnocentric ‘we’ be articulated with the inclusive ‘we’—a homeland for everyone—that envisions decolonization? How have we thought and problematized, in the here and now, the colonized present and its overturning?” If we follow Cusicanqui, it is easy to grasp that answers such as “optimization of biased algorithms”, “ethical”, “inclusive”, “transparent” or “human-centric” A.I., “compliant with data protection legislation”, or even a solely human rights approach to A.I. systems fall short of the bigger political mission to dismantle what Black feminist scholar Patricia Hill Collins calls the matrix of domination. Simply adding a layer of automation to a failed system means covertly magnifying oppression, disguised by a false sense of mathematical neutrality.

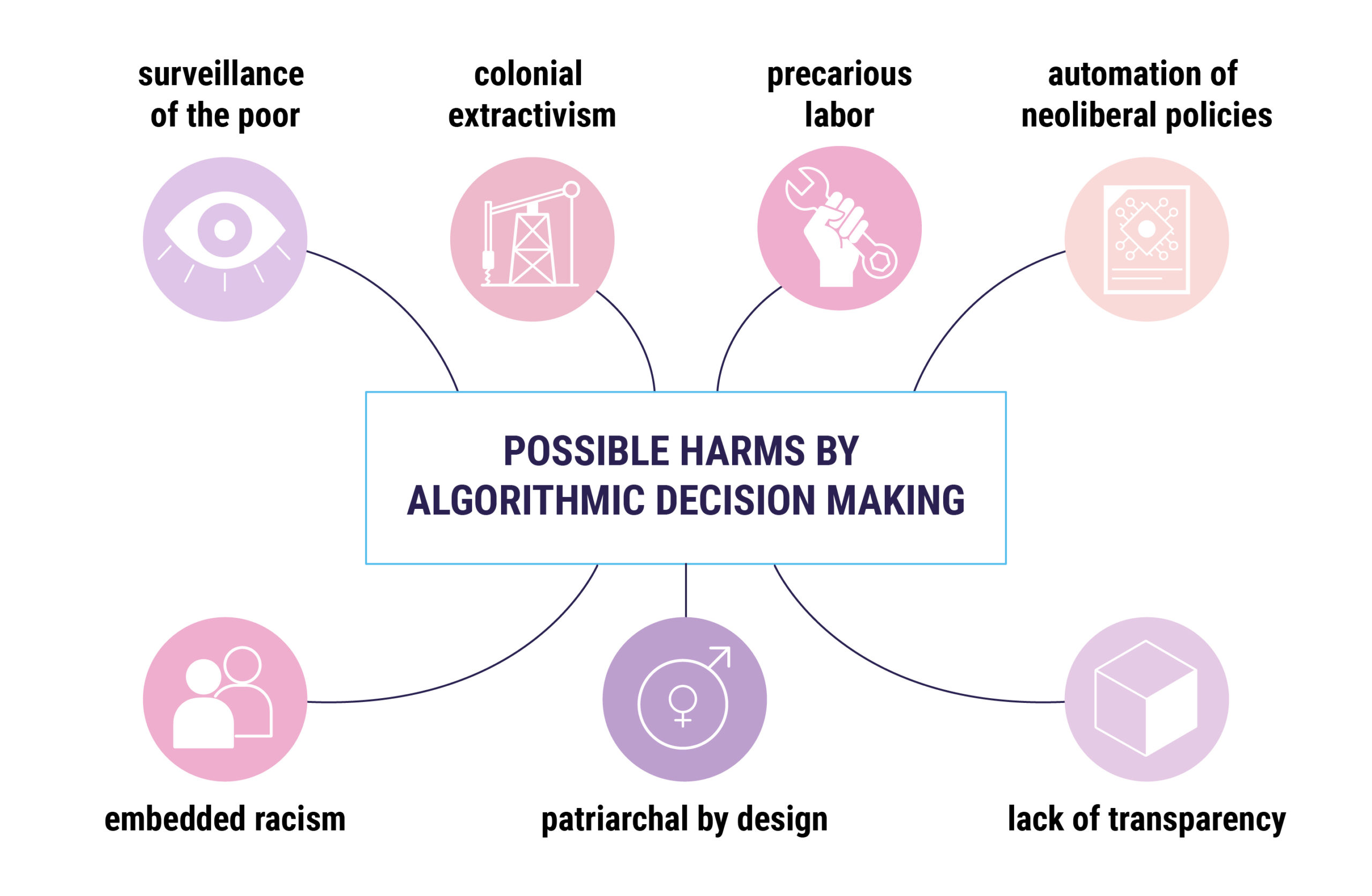

The current debate on A.I. principles and frameworks is mostly focused on “how to fix it?” instead of “why do we actually need it?” and “for whose benefit?”. Therefore, the first tool in our toolkit for questioning A.I. systems is the scheme of Oppressive A.I., which we drafted based on both empirical analysis of cases from Latin America and a bibliographic review of critical literature. Is a particular A.I. system based on surveilling the poor? Is it automating neoliberal policies? Is it based on precarious labor and the colonial extractivism of data bodies and resources from our territories? Are those who develop it part of the group targeted by it, or is it likely to restate structural inequalities of race, gender and sexuality? Does the wider community have enough transparency to check for itself the accuracy of the answers to the previous questions? These are some of the questions to be considered.

OPPRESSIVE A.I. FRAMEWORK

Several national policies for A.I., and most start-ups and big tech corporations, operate under the motto of “move fast and break things”, meaning: innovate first and check for possible harms later. We propose the opposite: before it is developed or deployed, an A.I. system should be checked for whether it is likely to automate oppression. Furthermore, if that A.I. system is neither focused on exposing the powerful nor targeting its own developers or their identity group, the developers should not be the ones to check whether the system falls into the categories of an Oppressive A.I.

Furthermore, those categories are not meant to be fixed; they can expand according to a particular context. For instance, if an A.I. system is developed to provide access to public services but is ableist in its design, it can also be considered an Oppressive A.I., as it excludes people with disabilities from accessing public services. So, watch out: the proposed Oppressive A.I. framework is not written in stone. It is just a general guide for questions, a work in progress, and should be reshaped according to the particular context and its oppressions. In this sense, we recall the Design Justice Network Principles as an important guideline to assess the context of oppression, since design justice “centers people who are normally marginalized by design and uses collaborative, creative practices to address the challenges faced by a particular community.”

Furthermore, if a system doesn’t fail a contextualized check of Oppressive A.I., why not ask: can this be a transfeminist A.I.? What does it mean to design a feminist algorithm?

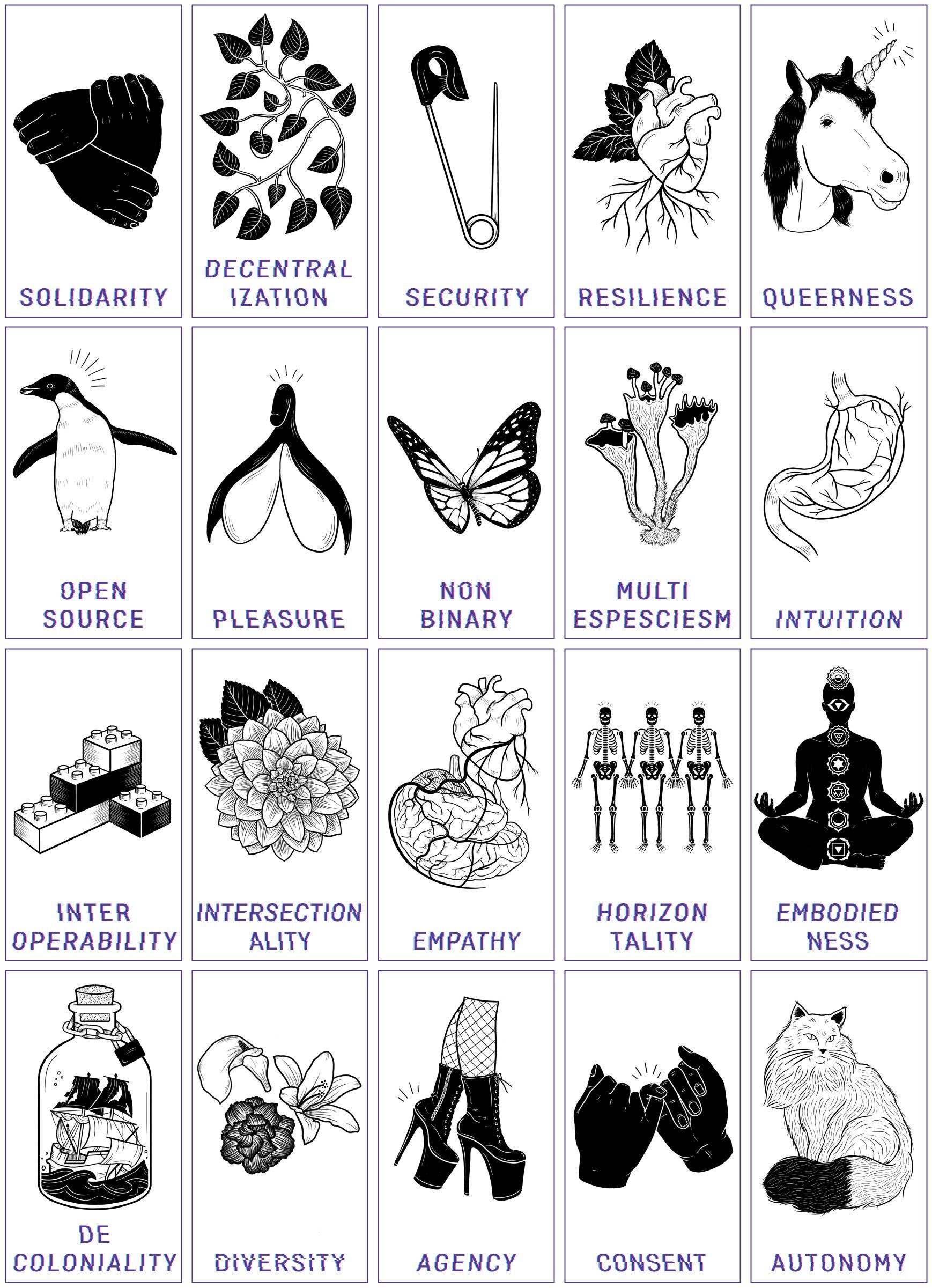

We believe that transfeminist values can be embedded in A.I. systems, just as values such as profit, addiction, consumerism and racism are currently embedded in several algorithms that pervade our lives today. To put this feminist approach into practice, we at Coding Rights, in partnership with our dear and brilliant scholar and design activist Sasha Costanza-Chock, have been experimenting with a card game collaboratively developed to design tools in speculative futures: the “Oracle for transfeminist Technologies”. Through a series of workshops, we have been collectively brainstorming what kind of transfeminist values should inspire us and help us envision speculative futures. As Ursula Le Guin once said: “the thing about science fiction is, it isn’t really about the future. It’s about the present. But the future gives us great freedom of imagination. It is like a mirror. You can see the back of your own head.”

Indeed, tangible proposals for change in the present emerged as we imagined the future in the workshops. Over time, values such as agency, accountability, autonomy, social justice, nonbinary, cooperation, decentralization, consent, diversity, decoloniality, empathy and security, among others, have emerged in the meetings.

While it was envisioned as a game, we believe that the ensemble of transfeminist values, brainstormed over a series of workshops with feminists from different regions and identity-based feminist agendas, can also be transformed into a set of guiding principles for envisioning transfeminist A.I. projects, alternative tech or practices that are more coherent with the present and future we want to see.

That is what we want to do next. We have started with the article “Consent to our Data Bodies: Lessons from feminist theories to enforce data protection”. What is a feminist approach to consent? How can it be applied to technologies? Those simple questions shed light on how limited the individualistic notion of consent proposed in data protection frameworks is. Universalized, it does not take into account unequal power relations. If we do not have the ability to say no to big tech companies when we need to access a monopolistic service, we clearly cannot freely consent. Laying out an extensive analysis of each of these values, as we did with the notion of consent, can gradually turn the game into another analytical tool. That is one of the steps that we are taking next 🙂

We are currently brainstorming other possible steps to amplify both the reach and the tools of this toolkit. Some activities involve continuing to test its applicability through workshops, developing local partnerships to increase case-based analysis, continuously studying new literature with critical approaches to A.I. systems, and seeking support for translations into Spanish and Portuguese. Also, if you know of other A.I. projects being deployed in Latin America by the public sector with possible implications for advancing feminist agendas, our mapping is collaborative and you can submit them here. If you have feedback about these frameworks, you can reach us at contact@codingrights.org.